Convexity

In this chapter you'll be introduced to:

- Convex Sets: Understanding the definition of convex sets and identifying convex and non-convex sets.

- Convex Functions: Learning the formal definition of convex functions and how to determine if a function is convex.

- Equivalent Conditions for Convexity: Exploring first-order and second-order conditions that are equivalent to convexity.

- Optimality in Convex Functions: Understanding why any local minimum of a convex function is also a global minimum.

- Application to Linear Regression: Preparing to apply the concepts of convexity to the mean squared error loss function in linear regression.

In the previous chapter, we discussed the linear regression model, where we aimed to find the best-fitting line (or hyperplane) for our data by minimizing the mean squared error (MSE) loss function. To efficiently optimize the MSE loss and guarantee finding the global minimum, we need to understand the concept of convexity in functions and sets. Convexity plays a crucial role in optimization, particularly in machine learning algorithms.

Convex Sets

Definition

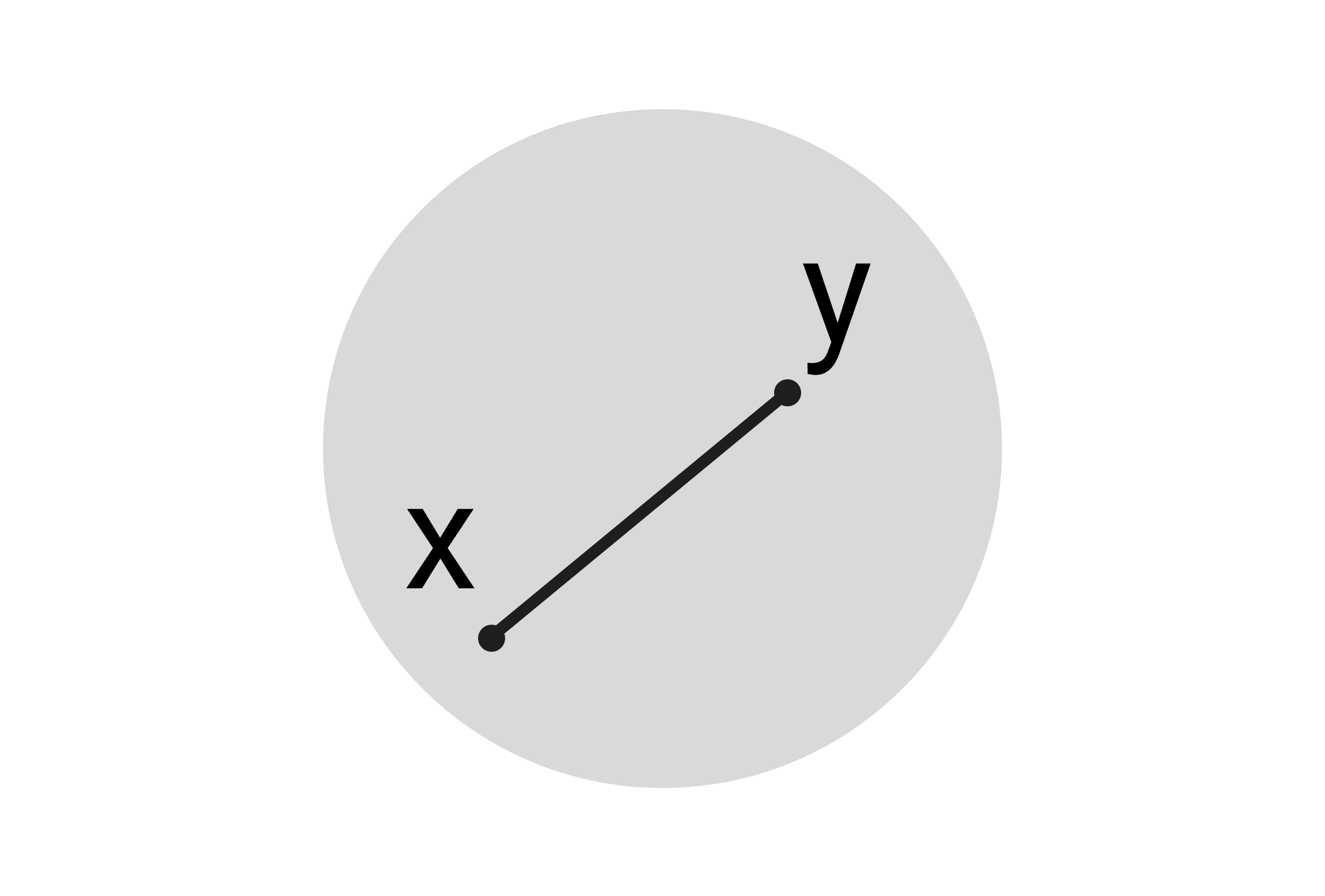

A set is called convex if, for any two points and any where , the following condition holds:

This means that the line segment connecting any two points in the set is entirely contained within the set.

Convex Set

- Solid Circle (Disc): All points inside and on the boundary of a circle in form a convex set.

- Polygon: Any convex polygon (e.g., square, triangle) is a convex set.

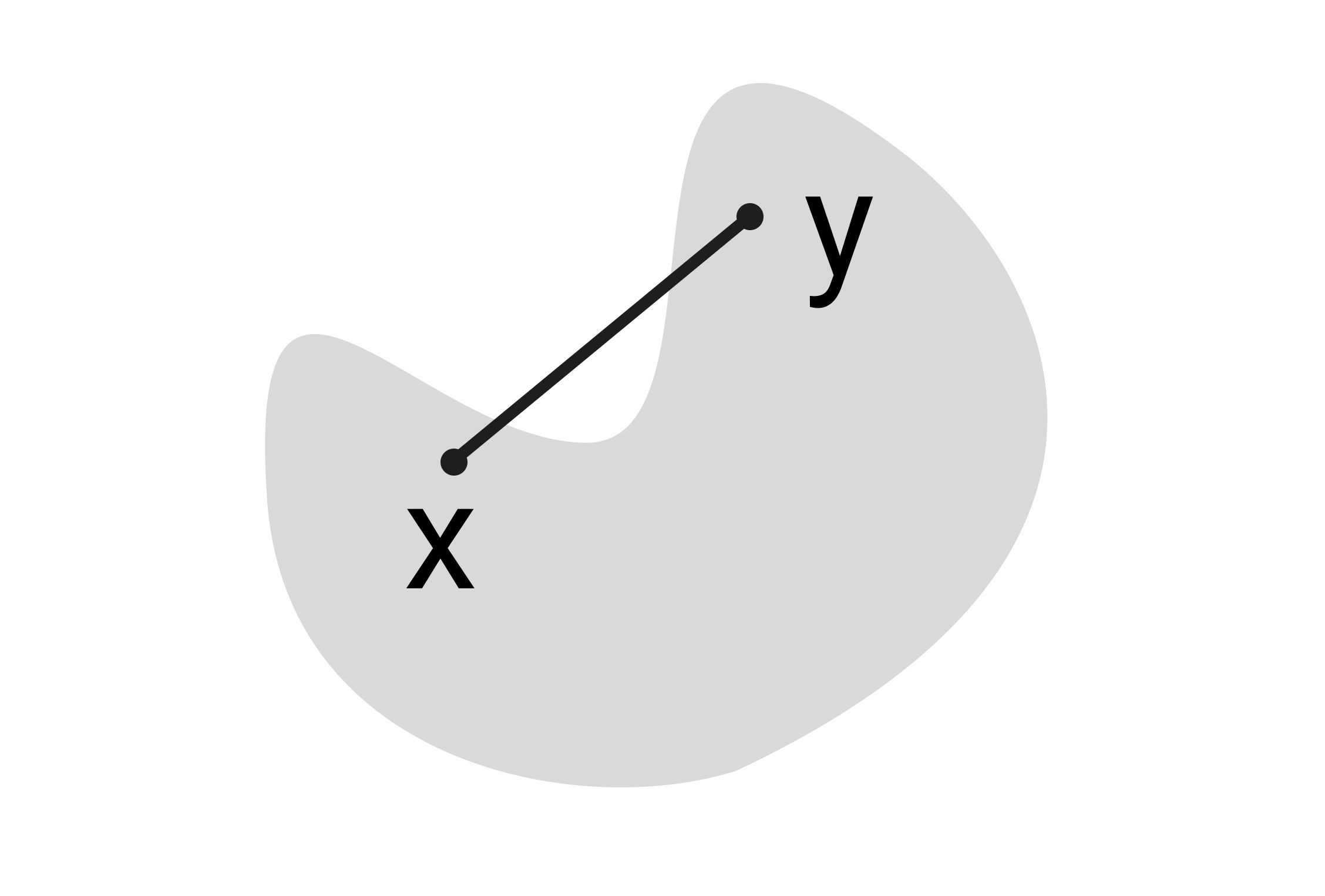

Non-Convex Set

- Shapes with Indentations: A shape like a crescent moon is not convex because some line segments between points in the set will pass outside the set.

- Disconnected Sets: If a set consists of two separate regions (e.g., two distinct circles), it's not convex because the line segment connecting a point from each region lies outside the set.

Convex Functions

Definition

A function is convex if:

-

The domain is a convex set.

-

For any and any where :

This inequality implies that a straight line (or plane) between any two points on the function lies above the function itself.

Interpretation

- Curving Up: Convex functions curve upwards, and the line segment between any two points on the graph of the function lies above the graph.

- Affine Functions: Linear functions are considered convex because they satisfy the convexity condition with equality.

Convex Functions

-

Linear Functions:

- Any linear (affine) function is convex.

- The convexity condition holds with equality.

-

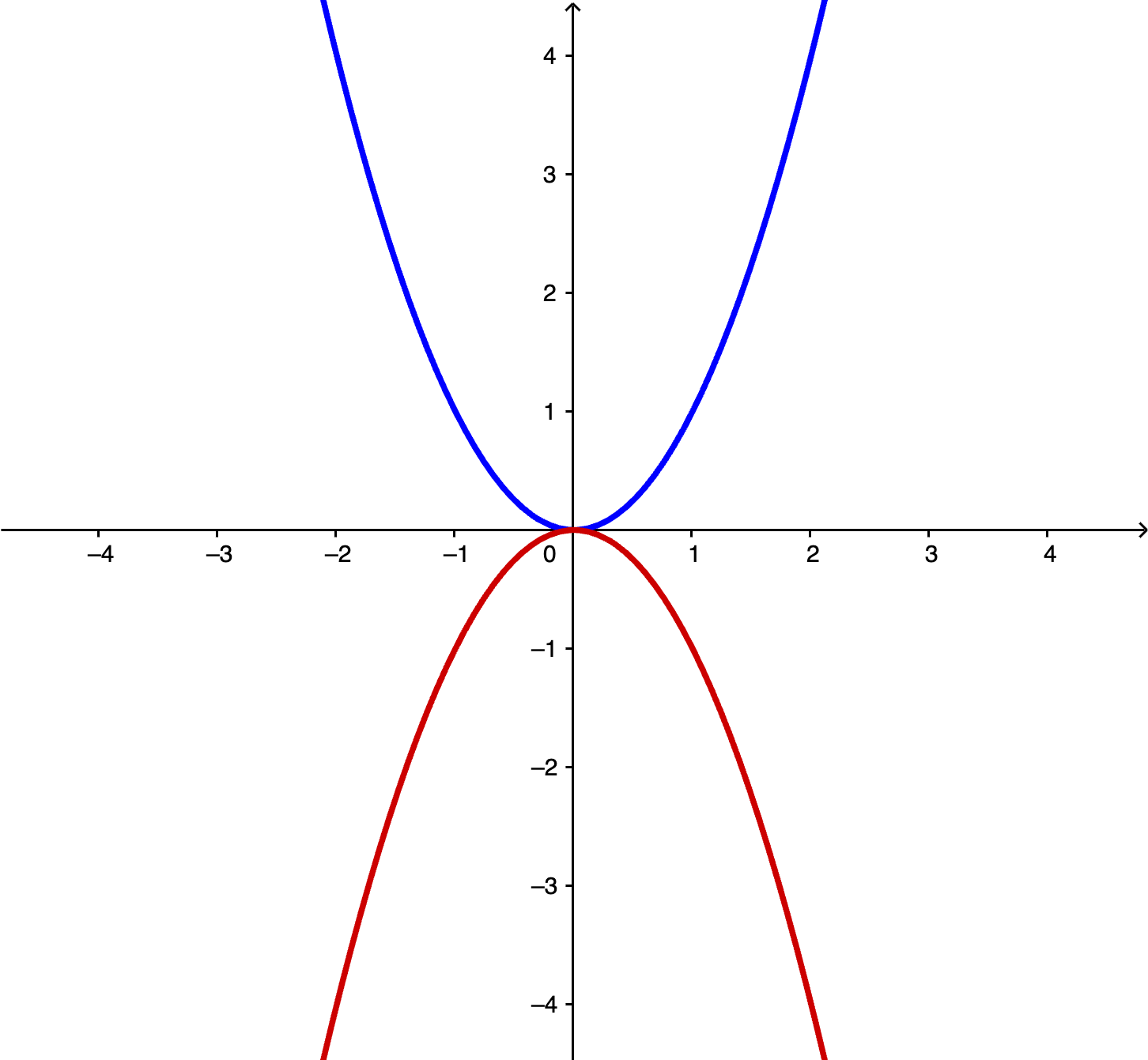

Quadratic Functions:

- The graph curves upwards.

- Satisfies the convexity condition.

Non-Convex Functions

-

Concave Functions:

- The graph curves downwards.

- Does not satisfy the convexity condition.

-

Functions with Discontinuities or Sharp Turns:

- Functions that are not smooth or have points where the convexity condition fails.

Examples:

- Blue Curve: Convex function (e.g., ).

- Red Curve: Non-convex function (e.g., ).

Equivalent Conditions for Convexity

Determining whether a function is convex using the definition can be challenging. Fortunately, there are equivalent conditions that can simplify this process, especially for differentiable functions.

Theorem

Suppose is a twice-differentiable function on a convex set . The following statements are equivalent:

-

(Convexity): is convex.

-

(First-Order Condition): For all :

- Here, is the gradient (vector of partial derivatives) of at .

-

(Second-Order Condition): For all , the Hessian matrix is positive semidefinite.

- A matrix is positive semidefinite if all its eigenvalues are non-negative.

Understanding the Conditions

First-Order Condition

- Geometric Interpretation: The function lies above its first-order Taylor expansion (tangent hyperplane) at any point .

- Implication: The tangent at any point is a global underestimator of the function.

Second-Order Condition

- Hessian Matrix: A square matrix of second-order partial derivatives.

- Positive Semidefinite: Indicates that the function curves upwards in all directions.

Proof Sketches

From First-Order to Convexity (B ⇒ A)

- Goal: Show that the first-order condition implies the convexity condition.

- Approach: Use the first-order condition to derive the convexity inequality by manipulating the expressions.

From Second-Order to First-Order (C ⇒ B)

- Goal: Show that if the Hessian is positive semidefinite, the first-order condition holds.

- Approach: Use Taylor's theorem to expand around and show that the remainder term is non-negative due to the positive semidefinite Hessian.

Optimality in Convex Functions

Global vs. Local Minima

- Global Minimum: A point where for all .

- Local Minimum: A point where for all in some neighborhood around .

Theorem

For a convex function :

- Any local minimum is also a global minimum.

Implications

- Optimization Simplification: When optimizing convex functions, finding a local minimum suffices.

- Guarantees in Optimization Algorithms: Algorithms that converge to a local minimum will also find the global minimum for convex functions.

Proof Outline

- Assumption: Suppose is a local minimum.

- Goal: Show that for all .

- Approach:

- Use the convexity condition to establish an inequality between and .

- Show that any deviation from cannot result in a lower function value.

Application to Linear Regression

Understanding convexity is essential for optimizing the mean squared error (MSE) loss function in linear regression.

Mean Squared Error Loss Function

Given:

-

Hypothesis Class:

-

Training Data:

-

MSE Loss:

Convexity of MSE Loss

- Objective: Show that is a convex function of .

- Importance:

- Ensures that any local minimum of is also a global minimum.

- Simplifies the optimization process for finding the best parameters .